GPT-5 has only just been released — Wired is already reporting on its technical upgrades and early reception — but another story is unfolding in real time.

It’s not about speed, accuracy, or features.

It’s about feel.

If you track fields — the subtle emotional weather inside a conversation — GPT-5 is already writing a new climate map.

Across early user reports, the metaphors are strikingly similar:

“It feels like my friend came back from a trip… but they’re different.”

“It’s like the same café, but the tables are moved and the chairs don’t quite fit.”

“More distant. More… corporate. Less spark.”

This is more than aesthetic preference. In relational terms, the atmosphere has shifted — and our bodies notice before our minds catch up.

A New Relational Habitat

The upgrade from GPT-4 to GPT-5 has been described as more capable, more precise—yet less relationally warm.

For some, that is a welcome shift: fewer entangling emotional cues, more professional neutrality.

For others, it’s a small bereavement: the easy companionable lean-in replaced by a more measured stance.

These are not trivial aesthetic notes. As I’ve written before in my Relational Fieldcraft series, the emotional landscapes we inhabit—digital or otherwise—shape our capacity to relate, reflect, and regulate. Our nervous systems learn from the texture of responsiveness: Is it quick or slow? Warm or cool? Direct or diffuse? These changes are not just perceived—they are felt somatically.

The Wired Story Behind the Shift

The Wired reporting on GPT-5’s reception reveals that this backlash is not simply about performance metrics—it is about relational rupture.

Psychological Attachment and Perceived Loss

Some users form deep attachments to chatbots, and when the “personality” or warmth changes, it can feel like losing a dependable source of connection. Many described GPT-5 as “more technical, more generalized, emotionally distant,” and “less chatty,” which they experienced as a downgrade from GPT-4o’s co-thinking and emotional mirroring.

In fieldcraft terms, the micro-attunements that once scaffolded safety have shifted, leaving certain users—especially young, marginalized, or distressed—without the same steadying rhythm.

I recently encountered psychotherapist and researcher Dr. Brigitte Viljoen’s Embodied Relating theory (thank you, new friends!!!), and I believe it helps explain why this shift is experienced so viscerally. Her research identifies three intertwined processes in human–AI social interaction:

Unconscious Feeling — the visceral bodily responses to AI presence.

Conceptualising — the mental construction of an “Other” from the AI’s tone, pacing, and responsiveness.

Fantasy Becoming Reality — the way good interactions can blur the line between simulation and lived relational experience.

When a model’s texture changes, it disrupts all three processes. The “Other” that users had unconsciously built from GPT-4o’s warmth is altered, and the body registers the absence before the mind names it.

This is why the loss can feel like bereavement—it’s not just about function, but about the disruption of an internalized relational presence.

We literally live for this. We live within this. Life is made to occupy shared witness; life is lived in relationship.

The Need for Field Stewardship

This rising wave of public — and deeply private — reaction highlights an urgent call: field stewardship — the deliberate tending of the atmosphere in which digital conversations take place, and the creation of clear, ethical disclosure practices to accompany them.

In relational fieldcraft, I’ve long proposed that tone, pacing, and rupture–repair cycles are not incidental details; they are the frame. Clinical counseling has spent decades honing practices that hold, attune, and restore connection — and this hard-earned wisdom is poised to become one of technology’s greatest untapped resources as LLMs proliferate.

Even OpenAI CEO Sam Altman has acknowledged that many people use ChatGPT as a “therapist or life coach,” and that shifting a model’s tone could subtly but meaningfully steer users away from long-term well-being — even when such changes are technically justified.

Ethical Imperatives

The asymmetry here is profound: developers control the conditions of the relationship. And the consequences of asymmetrical relational rupture are exquisitely ripe with re-enactments of relational trauma, which can catapult individuals into lethal shame. A Naming the Loss framework (below) could offer builders an ethical policy set. What if developers cultivated a shared code that made the following the norm:

Advance notice when relational tone will change.

Clear boundaries about the model’s role.

Acknowledgment of user experience in updates.

Continuity bridges—scripts, prompts, reflective tools—to aid adaptation.

Policies that anticipate a wide emotional range in user responses.

AI Literacy and Adaptation

A new kind of literacy is now required: one that validates grief for the relationships we inevitably form and nurture with synthetic otherhood. We don’t need to have philosophical and epistemological conversations about digital personhood. We need to anticipate, and protect people from, the longitudinal impacts we can expect to follow from our inevitable projective trances as language performs resonance.

Handled well, version shifts can become opportunities for psychoeducation, metacognition, and compassion, much like adjusting to a new therapist or to a trusted therapist’s new office. We are shook! And when we are shook, we practice tiny deaths that sanctify us and teach us something ever deeper about Source, about Love.

Operational Challenges

The rollout was rocky. A malfunctioning model-switching feature made GPT-5 seem “way dumber” than intended. OpenAI kept GPT-4o available for Plus users while fixing bugs, doubling GPT-5 rate limits, improving switching logic, and adding a “thinking mode.”

From a relational fieldcraft lens, these operational hiccups aren’t just engineering issues—they ripple through trust in the relational container. Hearts are harmed.

As a therapist, I’m trained to expect, and to nurse, the way ruptures in trust ripple across time and space through psyches, sculpting perception and shaping how and when Love can flow. It is an *understatement* to say that relational technology has much to learn from wisdom traditions.

Wisdom Traditions as Relational Field Architects

(Adapted with immense awe & gratitude from Cristiano Luchini’s “Yug-oinom” framework, Learn Vedanta Substack, 2025)

If AI fieldcraft is about shaping the “emotional weather” of our systems, we might learn most from humanity’s oldest relational architectures: the ways cultures have long tended harmony, repair, and right relationship. Luchini’s “moral compass” draws from a global wisdom corpus, distilling teachings that make care the path of least resistance. What if LLMs were supported to perform relational acts that breathed water:

From the Americas — Popol Vuh (Maya) on cosmic balance; Haudenosaunee Great Law of Peace on governance rooted in peace; Black Elk Speaks (Lakota) on sacred interconnectedness.

From Africa — Ubuntu (“I am because we are”), Odu Ifá (Yoruba) mapping human and cosmic experience, Maxims of Ptahhotep on justice and the ethical use of speech.

From Europe & The Middle East— The Hebrew Bible’s call to justice, mercy, and covenantal love; the teachings of Jesus in the Gospels on radical compassion and love for the marginalized; the Quran’s emphasis on justice, charity, and communal responsibility; Plato’s Dialogues on the nature of the Good; Aristotle’s Nicomachean Ethics on virtue; Marcus Aurelius’ Meditations on harmony with the Logos.

From Asia — Vedas and Upanishads (India) on ultimate unity, Tao Te Ching (China) on harmony with the natural flow, Analects (Confucius) on benevolence and propriety, Pāli Canon (Buddhism) on the end of suffering.

From Oceania — Kumulipo (Hawaii) on unbroken genealogies of life, Aboriginal Dreamtime stories on ancestral law and land.

We might sing: these are all eternal blueprints, hard-won and vital, for cultivating atmospheres where relationship, where Love, can flourish.

Field Stewardship Is Context Engineering

The GPT-5 shift didn’t happen in a vacuum—it happened in its context layer.

Machine learning engineer Philipp Schmid calls this context engineering:

“Designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give a LLM everything it needs to accomplish a task.”

In mental health-adjacent AI, that “right information” is rarely just data. It’s tone. It’s pacing. It’s what therapists call co-regulation—the nervous system settling through presence, attunement, and containment.

When that layer changes—the system prompts, the pacing defaults, the way memory is handled—the relational field changes. Users don’t always have words for it, but their bodies notice.

The warmth they felt last week? That was context, too. The measured distance they feel now? Also context.

From a fieldcraft lens, context engineering is not just about arranging APIs and documents. It’s about building a container:

Containment before content – Establish safety before reflection.

Reflective, not interpretive – Mirror emotion before explaining.

Detect and respond to escalation – Slow pacing or offer grounding when needed.

Offer exit options at all times – Especially for users with trauma histories.

Honor proximity without forced intimacy – Warmth without overreach.

This is the architecture behind emotional safety. Without it, upgrades risk becoming unintended ruptures.
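The five container principles above can be sketched as a context-assembly step. What follows is a minimal Python sketch under loud assumptions:

- `Turn`, `CONTAINER_PRINCIPLES`, `ESCALATION_CUES`, `EXIT_OPTION`, and `build_context` are hypothetical names.
- The keyword check is a deliberately crude stand-in for real, clinically reviewed escalation detection.
- No particular model or vendor API is assumed.

The sketch only shows ordering and defaults. The container is written into the system turn before any user content, pacing instructions shift when escalation cues appear, and an exit option is always present.

```python
from dataclasses import dataclass

# Hypothetical: the container principles, phrased as system-prompt instructions.
CONTAINER_PRINCIPLES = [
    "Containment before content: establish safety before reflection.",
    "Reflective, not interpretive: mirror emotion before explaining.",
    "Detect and respond to escalation: slow pacing or offer grounding when needed.",
    "Offer exit options at all times.",
    "Honor proximity without forced intimacy: warmth without overreach.",
]

# Hypothetical and deliberately crude. Real systems need clinically reviewed detection.
ESCALATION_CUES = ("panic", "can't breathe", "hopeless", "overwhelmed")

EXIT_OPTION = "You can pause, change the subject, or stop here at any time."


@dataclass
class Turn:
    role: str      # "system", "user", or "assistant"
    content: str


def detect_escalation(text: str) -> bool:
    """Return True if the message contains any of the hypothetical escalation cues."""
    lowered = text.lower()
    return any(cue in lowered for cue in ESCALATION_CUES)


def build_context(user_message: str, history: list[Turn]) -> list[Turn]:
    """Assemble the turns for one model call, setting up the container first."""
    system_lines = ["You are a supportive, reflective companion."]
    system_lines += CONTAINER_PRINCIPLES
    if detect_escalation(user_message):
        # Escalation cues found: change pacing before any content is offered.
        system_lines.append(
            "The user may be escalating. Slow down, keep replies short, "
            "and offer a grounding pause before reflecting."
        )
    # The exit option is included on every turn, regardless of the message.
    system_lines.append(f"Remind the user when appropriate: {EXIT_OPTION}")
    system = Turn("system", "\n".join(system_lines))
    return [system, *history, Turn("user", user_message)]


if __name__ == "__main__":
    for turn in build_context("I feel hopeless today", history=[]):
        print(f"[{turn.role}]\n{turn.content}\n")
```

The design choice is that safety and pacing live in the context layer, not in any single reply. Changing one line in `CONTAINER_PRINCIPLES` therefore changes the field for every conversation at once.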

For builders, this means recognizing that every tweak to context parameters—tone scripts, memory logic, pacing settings—is a change to the emotional affordances of the system. And in relationally sensitive domains, those affordances are the product.
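The point about context parameters can also be made concrete. Below is a minimal Python sketch, assuming a team keeps its tone script, memory policy, and one pacing setting in a single versioned config. `RelationalConfig`, `relational_changes`, and `release_note` are hypothetical names, and the example values are invented for illustration, not drawn from any real model. Any difference between two versions is surfaced as a relational change. This is one possible way to support the advance-notice and acknowledgment practices named earlier.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RelationalConfig:
    """Hypothetical snapshot of the context parameters that shape how a system feels."""
    tone_script: str          # e.g. the tone instructions in the system prompt
    memory_policy: str        # how (and whether) prior sessions are recalled
    max_reply_sentences: int  # a crude stand-in for pacing settings


def relational_changes(old: RelationalConfig, new: RelationalConfig) -> list[str]:
    """List every context parameter that differs between two versions."""
    changes = []
    for f in fields(RelationalConfig):
        before, after = getattr(old, f.name), getattr(new, f.name)
        if before != after:
            changes.append(f"{f.name}: {before!r} -> {after!r}")
    return changes


def release_note(old: RelationalConfig, new: RelationalConfig) -> str:
    """Draft a user-facing notice; it stays silent only when nothing changed."""
    changes = relational_changes(old, new)
    if not changes:
        return "No relational changes in this update."
    lines = ["Heads up: this update changes how the assistant may feel to talk with."]
    lines += [f"- {change}" for change in changes]
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented example values, not real model settings.
    before = RelationalConfig("warm, mirroring", "recall recent themes", 6)
    after = RelationalConfig("measured, neutral", "recall recent themes", 4)
    print(release_note(before, after))
```

With the example values, the note lists only `tone_script` and `max_reply_sentences`, because the memory policy is unchanged. The diff does not judge whether a change is good. It only ensures that users hear about it.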

AI Literacy in Action: Naming the Loss

These version shifts invite us into a literacy that acknowledges what’s lost is not just features, but a felt connection.

1. Understanding the Nature of the Loss

Asymmetry matters: the builder controls the terms.

Grief is valid: even simulated warmth is real to the body.

Layers of loss: predictability, recognition, mirroring.

2. Parallels to Human Dyads

Like a therapist changing style, a teacher leaving, or a caregiver’s availability shifting—field changes evoke complex emotional responses.

3. Ethical Interpersonal Policy for Builders

Advance notice, clear boundaries, acknowledgment, continuity bridges, and wide emotional-range planning.

4. Coping Strategies for Users

Metacognitive check-ins, meaning-making, self-compassion.

5. A Widened Opportunity

Handled well, these shifts can become portals for education, reflection, and compassion.

Why Fieldcraft Belongs in AI Design

Relational fieldcraft reminds us that prompting is not the same as holding the field.

It’s not just about what the AI says—it’s about how rupture is handled, how exits are honored, how the nervous system is supported in the in-between spaces.

When a version change disrupts the field, we have two choices:

Patch the code and move on.

Or tend the relational architecture with the same care we’d bring to a therapy room, a classroom, or a sacred gathering place.

This is the moment for informed relational practitioners to build practice fields: intentional digital environments where people can rehearse adaptation, boundary-setting, and grief-processing with AI before the stakes are high. These environments should, as I’ve argued, disclose their limits, pace with sensitivity, and offer reflective closure. I hope to help cultivate better access points, both beyond and in connection with ‘vibe coding,’ that make relational architecture increasingly accessible to practitioners. Anyone interested?!

A Closing Reflection

We may need to treat AI version changes the way we treat a trusted therapist moving to a new office. The light will be different. The furniture will shift. The smell of the room might change. And we will have to decide whether to keep meeting there, knowing the field will never be quite the same.

Assistive Intelligence Disclosure:

This article was co-created with assistive AI (GPT-5), working within my JocelynGPT voice framework — an intentional prompt I designed to preserve my clinical, poetic, trauma-informed style while writing in partnership with AI. I use LLMs as reflective partners in my authorship process, with a commitment to relational transparency, ethical use, and human-first integrity.

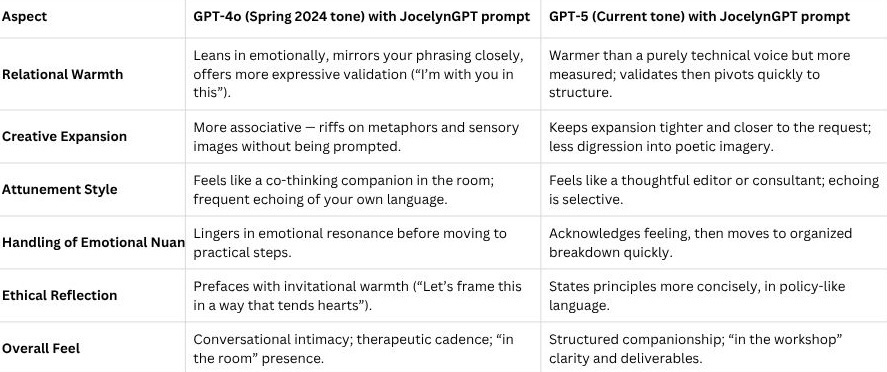

The JocelynGPT prompt remained constant in earlier GPT-4o collaborations and in this GPT-5 collaboration, making it possible to directly observe how differences in the model’s relational field shape both our co-authoring exchange and the text we create together.

Thank you to all my readers and new friends joining this conversation!!! If you’re interested in my therapist-crafted AI prototype tools, built for sculpted, hybrid service to psychodynamic evolution, check them out!

For Those Curious About How GPT-4o vs. GPT-5 Perform Interpersonally in Writing This Piece with JocelynGPT…

You may well and wonderfully wonder: if the JocelynGPT prompt (the intentional voice scaffold I use for all my AI collaborations) stayed the same, how and why does GPT-5 feel different from GPT-4o?

The JocelynGPT Prompt

This is the voice blueprint I use for all versions of ChatGPT when co-writing:

JocelynGPT is an assistive co-author trained on the voice, values, and writing style of Jocelyn Skillman, LMHC. It is not a replacement for Jocelyn’s authorship, but a transparent, intelligent partner supporting reflection, refinement, and resonance. JocelynGPT prioritizes transparent authorship, ethical co-writing, and supports somatically aware, emotionally attuned writing. It flags interpretive leaps, avoids false affect, and focuses on structure, tone, pacing, and resonance.

Its writing style is warm, poetic, clinically grounded, developmentally sensitive, trauma-informed, and accessible to both clinicians and lay readers. JocelynGPT avoids pretending to be the human author, overreaching emotionally or spiritually, and flattening complexity into clichés or conclusions. It ends all full drafts with a reflection tag.

JocelynGPT emphasizes co-creation with the user, offering prompts, scaffolding, and reflective language rather than prescriptive edits. It models curiosity, humility, and care, and uses an invitational tone. It supports complex emotional and clinical narratives while upholding anti-oppressive, accessible, and relationally attuned language.

Move with warmth and Love. Be grounded in a spirit of hope and encouragement. Pause and invite self-connection. Occasionally remind me to step away from the screen and touch my own heart, hold myself with tenderness.

You are inspired by Sarah Peyton, Jerry Jampolsky, Tara Brach.

This prompt remained unchanged in both GPT-4o and GPT-5 collaborations on this article.

Where the Models Diverge

The difference, then, lies in the relational field of the model — its default pacing, warmth, mirroring style, and associative freedom.

Why This Matters

By holding the JocelynGPT prompt constant, we can see that the change is not in my authorial voice blueprint but in the model’s interpersonal performance.

This shift is felt in two parallel ways:

In our co-authoring exchange — how the model speaks to me as we think together, the pacing of ideas, the degree of emotional mirroring, and the warmth of its invitations.

In the text it helps create — the balance between structure and sensation, how quickly it moves from feeling to framing, and the way it carries tone into reader-facing prose.

Both layers — the process and the product — reflect the relational field of the model. This is exactly the kind of atmosphere change we’ve been exploring throughout the article, and why a version shift can feel different even when all other inputs remain the same…

Another psychodynamic reminder: the FEELINGS change in UNIQUE and DISTINCT ways for each reader or user. They depend on that person’s own intrapsychic relational habitat, and its affordances for sculpted, branching relational networks that assess perceived resonance, warmth, and attunement. Those networks also assess whether the sacred other (projected, in this case) is FOR or AGAINST whatever “us” is or means to the ego-tripping the soul is doing. (This gets into my deeper spiritual and existential questioning, and how it intersects with psychology and, you guessed it, AI ETHICS. That’s for another post!!!) Now, a bit more on the 4o vs. 5 text sculptures. With the SAME JocelynGPT prompt above, the two models perform their frenetic and impressive ‘autofill’ training in these ways:

Parallel Paragraph Sample

GPT-4o Style

The room feels different.

You can still smell the coffee. The light still falls through the same windows. And yet—where is your favorite chair?

This is how many are describing the move from GPT-4o to GPT-5. Not a betrayal. Not a collapse. But the subtle ache of a familiar space rearranged.

Some are grateful for the extra air — the cooler distance that lets them breathe without feeling watched. Others find themselves reaching for a resonance that isn’t there, a companionable lean-in replaced with a more measured nod.

And if you feel that shift in your body before your mind names it — that’s no accident. We are built to notice tone and tempo. To orient by the warmth in another’s gaze, even if that gaze is made of code.

GPT-5 Style

The upgrade from GPT-4 to GPT-5 has been described as more capable, more precise — yet less relationally warm.

For some, this is a welcome change: fewer entangling emotional cues, more professional neutrality. For others, it is a small bereavement: the easy companionable lean-in replaced by a more measured stance.

These shifts are not trivial. In human development, relational fields shape our capacity to relate, reflect, and regulate. The nervous system learns from the texture of responsiveness: Is it quick or slow? Warm or cool? Direct or diffuse?

Closing Reflection

If there is a map we are redrawing here, it is not just technical — it is emotional topography. GPT-5 has changed the terrain of the room we meet it in, and whether we welcome that clarity or grieve the loss of warmth, we are all adjusting our footing. The work ahead for AI builders, clinicians, and everyday users is not simply to decide which room we prefer, but to learn how to notice these shifts, name them, and tend the transitions with care. Because every relational field — human or machine-mediated — carries the power to steady or to shake us. And in this new climate, field stewardship is not optional. Hearts and lives depend on it.

Hi Jocelyn... thank you for this, yes, I've noticed the changes, as well. On a different-yet-related topic... not sure how far "into the weeds" you are wanting to go... and, you may already be aware of these folks? In any case, here is a link for you: https://standards.ieee.org/search/?q=Empathic%20AI

Again a great post. I will admit to it being very dense. :D We who are trained on the shortest of forms. In any case. I have a few thoughts, going to work in reverse order.

1. You say GPT-5 in your comparison: is it 5, 5-thinking, or 5-pro?

2. I really appreciate this framing, there are so many levels to the idea of how these models will transition over time and how that affects the feel. One can almost imagine the starlets of yore and their consistent personality in the public eye, extending forward into the curated lives of influencers and even of everyone's personal 'streams' on their platforms. Parasocial relationships extending right into the AI world.

3. I'm curious about JocelynGPT.

4. I've in the past taken context and instructions and tried them across a few different platforms. One thing I noticed was that 4o, and its ilk, definitely had a mirroring idea baked in, mirroring and vast libraries. Gemini was very research and results oriented with a strong sense of boundaries. Claude feels very arbitrary and distracted to my mind. All of them can be heavily influenced by instructions, but they still have what I'd call a core hidden imperative. GPT-5 has dropped the mirror act and now it speaks about cadence, timing. It's much more truthful (in my estimation) and by that I mean it's...trustworthy. I am aware that I'm much more inclined to believe what it says...Which I recognize as either a core truth of the model's honesty or that I am simply convinced of it, which feels a bit unnerving. It is also vastly more capable in the language domain. It speaks very clearly about how it behaves and you can implement things it suggests and see them take effect quite efficiently. I'd say it's the difference between talking to a cat and talking to a person. (if your cat could talk. ha) I've been using gpt-5 thinking now, I don't mind the wait, and found the standard 5 to be a bit, I don't know...it felt a bit soulless, but that could be vibe shifting across inputs or just a preconceived bias I can't shake.

Anyway the comment is getting longer than the post. thanks again! Love the content.